ClearCross is an AI-powered iOS application that enhances crosswalk safety for visually impaired individuals.

Using object detection models, the app identifies crosswalk signals in real time and provides users with auditory and haptic feedback to guide them safely across the street. The application was developed in Xcode for iOS devices.

ClearCross leverages Apple’s CoreML machine learning (ML) framework, which runs efficiently on devices with limited computational power. The application uses the device’s camera to detect crosswalk pedestrian signals, classifies them as “Walk” or “DoNotWalk,” and delivers clear feedback to the user. The ML model is trained on a public dataset to improve robustness across environments and to account for regional variability in crosswalk signals. In trials, ClearCross demonstrated the potential to supplement or replace existing Accessible Pedestrian Signals (APS) or to serve as a portable solution where APS infrastructure is insufficient.

Future development will focus on adding augmented reality features to enhance the user experience, expanding compatibility to Android devices, and improving the ML training dataset to support global use. ClearCross aims to reduce the risk of accidents for visually impaired pedestrians while promoting independence in navigation.

Kelvin Orduna, Evan Quirk, Cody Schulist

Arkansas School for Mathematics, Sciences, and the Arts

February 2025

According to a 2019 World Health Organization (WHO) article, at least 2.2 billion people worldwide have a visual impairment [1]. Causes include refractive errors, cataract, diabetic retinopathy, glaucoma, and age-related conditions, among many others. Approximately 1 billion of these cases could have been prevented or have yet to be addressed [1]. A 2016 news release from the National Institutes of Health (NIH) states that the number of people in the US with visual impairment or blindness is expected to double to more than 8 million by 2050 [2]. Visually impaired people use a number of techniques and tools to navigate their surroundings [3]. Despite these techniques, people with visual impairments remain at a higher risk of accidents [4]. Although some accommodations exist, most have limitations, whether physical or electronic.

Both government and industry have attempted to address the safety concerns of visually impaired pedestrians. In 1990, the Americans with Disabilities Act (ADA) required curb ramps and accessible sidewalks for all publicly accessible buildings [5]. The Act also led to the installation of truncated domes, yellow tactile pads that mark the end of a sidewalk or ramp. These warning pads work with Accessible Pedestrian Signals (APS) at crosswalks to alert visually impaired pedestrians that they are about to enter the street. APS indicates to a visually impaired pedestrian when it is safe to cross through a combination of a pushbutton, audible and vibrotactile WALK indications, a pushbutton locator tone, a tactile arrow, and automatic volume adjustment [6]. Mobile applications have also been developed to provide additional aid. Oko, an app developed by AYES, Inc., assists with crossing by notifying the user when the crosswalk signal ahead shows WALK and by providing detailed walking directions [7]. However, the app is sold on the Apple App Store at a significant cost to the user. The goal of ClearCross is to provide an accessible resource that allows visually impaired individuals to take preventive measures and cross the road more safely.

An image dataset is required to train an object detection model. A dataset can either be created from scratch or obtained from an online source. After several datasets were evaluated online, one was selected from Roboflow, a platform where users can upload their own datasets for public use. The dataset, "AuralVision Computer Vision Project" by the user Merlimar, was chosen because it contains 2,889 images of crosswalk signals, mostly from the United States [8]. The images were exported from Roboflow without their existing annotations and labels. RectLabel version 2024.9.28, a Mac application, was then used to annotate each image [9]. For each image, a bounding box was drawn around only the area containing the signal, and the box was labeled "Walk" or "DoNotWalk" according to the signal shown. If multiple signals were present in an image, all of them were annotated to improve training accuracy. Each image was then exported with a JSON annotation file and sorted into a folder according to the signal(s) it contained. A main folder was also created containing a copy of all 2,889 images alongside the JSON file with the labeling information. Together, the images and JSON file could be imported into a machine learning framework for training.
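The paper does not show the contents of the exported JSON file. As a sketch, assuming the annotations follow CreateML's object detection JSON layout (an array of entries, each naming an image file and listing labeled bounding boxes whose `x` and `y` give the box center in pixels), the file could be decoded and checked in Swift as follows. The type names, the `labelCounts(in:)` helper, and the sample data are hypothetical and are not taken from the ClearCross project.

```swift
import Foundation

// Hypothetical Codable model of a CreateML-style object detection
// annotation file: an array of images, each with labeled bounding boxes.
struct BoxCoordinates: Codable {
    let x: Double       // center x, in pixels
    let y: Double       // center y, in pixels
    let width: Double
    let height: Double
}

struct BoxAnnotation: Codable {
    let label: String   // "Walk" or "DoNotWalk"
    let coordinates: BoxCoordinates
}

struct ImageAnnotation: Codable {
    let image: String
    let annotations: [BoxAnnotation]
}

// Counts how many bounding boxes carry each label across the whole file.
func labelCounts(in data: Data) throws -> [String: Int] {
    let entries = try JSONDecoder().decode([ImageAnnotation].self, from: data)
    var counts: [String: Int] = [:]
    for entry in entries {
        for box in entry.annotations {
            counts[box.label, default: 0] += 1
        }
    }
    return counts
}

// Illustrative data (not from the actual dataset): one image with two
// signals and one image with a single signal.
let sampleJSON = """
[
  {"image": "signal_001.jpg",
   "annotations": [
     {"label": "Walk", "coordinates": {"x": 210, "y": 140, "width": 60, "height": 80}},
     {"label": "Walk", "coordinates": {"x": 480, "y": 150, "width": 55, "height": 75}}
   ]},
  {"image": "signal_002.jpg",
   "annotations": [
     {"label": "DoNotWalk", "coordinates": {"x": 320, "y": 200, "width": 70, "height": 90}}
   ]}
]
"""

let counts = try labelCounts(in: Data(sampleJSON.utf8))
print(counts["Walk", default: 0], counts["DoNotWalk", default: 0])  // prints "2 1"
```

Counting boxes per label in this way is a quick check, before training, that images containing several signals contributed one annotation per signal.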

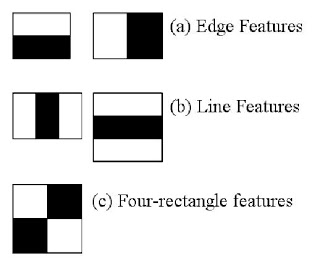

Paul Viola and Michael Jones introduced Haar feature-based cascade classifiers in 2001. The approach trains a classifier on many positive images (which contain the object) and negative images (which do not), then uses the trained classifier to detect the object in new images or video frames. Haar features are values calculated by subtracting the sum of the pixels in the black rectangles from the sum of the pixels in the white rectangles (as shown in Figure 1). Varying the sizes and positions of these rectangles produces a very large number of features: a single 24x24 window yields over 160,000 features [10].
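A two-rectangle (edge) Haar-like feature can be computed directly from this definition. The following is a minimal Swift sketch, not OpenCV's implementation; it assumes a grayscale image stored as nested arrays of intensities, treats the left half of the window as the white rectangle and the right half as the black one, and uses hypothetical names (`GrayImage`, `Rect`, `twoRectFeature`).

```swift
// Grayscale image stored row-major: pixels[row][column].
typealias GrayImage = [[Int]]

// A rectangle in pixel coordinates: top-left corner plus size.
struct Rect {
    let x: Int, y: Int, width: Int, height: Int
}

// Sums pixel intensities inside a rectangle by visiting every pixel.
func naiveSum(_ image: GrayImage, _ r: Rect) -> Int {
    var total = 0
    for row in r.y..<(r.y + r.height) {
        for col in r.x..<(r.x + r.width) {
            total += image[row][col]
        }
    }
    return total
}

// Two-rectangle Haar-like feature: white-rectangle sum minus black-rectangle
// sum, with the white rectangle on the left half of the window.
func twoRectFeature(_ image: GrayImage, _ window: Rect) -> Int {
    let half = window.width / 2
    let white = Rect(x: window.x, y: window.y, width: half, height: window.height)
    let black = Rect(x: window.x + half, y: window.y, width: half, height: window.height)
    return naiveSum(image, white) - naiveSum(image, black)
}

// Example: a vertical edge, bright on the left and dark on the right.
let image: GrayImage = [
    [200, 200, 50, 50],
    [200, 200, 50, 50],
    [200, 200, 50, 50],
    [200, 200, 50, 50],
]
print(twoRectFeature(image, Rect(x: 0, y: 0, width: 4, height: 4)))  // prints 1200
```

A window containing a bright-to-dark vertical edge yields a large positive value, while a uniform window yields zero; evaluating every rectangle pixel by pixel like this is what the integral image avoids.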

For efficiency, a technique called the integral image is used. It reduces the sum of the pixels in any rectangle to a short operation involving only four values read from the integral image at the rectangle's corners. Most of the resulting features are irrelevant, so a machine learning technique called AdaBoost is used to select which features to keep. AdaBoost iteratively evaluates the features against the training data, selects the feature with the lowest weighted error rate, gives more weight to the images that were misclassified, and repeats until the desired accuracy is reached. This process reduces the more than 160,000 features to around 6,000 [10]. As a final efficiency improvement, the features are grouped into a cascade of stages. A window that fails a stage is discarded immediately and not processed further; a window that passes is evaluated by the next stage, and so on.

A Haar cascade was initially chosen for its fast detection speed and simple training process. For some objects, readily available datasets or pretrained cascades exist; however, no public pretrained model was found for crosswalk signals, so one had to be created. Because the target objects were crosswalk signals, it was difficult to find a large and varied enough set of positive and negative images to produce effective results. The custom-trained cascade also produced frequent false positives, which reduced the model's accuracy. Manually adjusting the training parameters can mitigate this, but doing so proved time-consuming and repetitive because the false positives persisted. Therefore, a different machine learning model was chosen.
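The integral image and the cascade's early rejection described above can be sketched in Swift as follows. This is an illustrative simplification under stated assumptions: pixels are nested arrays of grayscale intensities, and each stage is modeled as a hypothetical scoring closure with a threshold, whereas trained cascades (for example, those produced by OpenCV) store boosted weak classifiers built from the selected Haar features.

```swift
// Integral image: table[r][c] holds the sum of all pixels in rows < r and
// columns < c. The extra zero row and column avoid bounds checks.
struct IntegralImage {
    private let table: [[Int]]

    init(_ pixels: [[Int]]) {
        let rows = pixels.count
        let cols = pixels.first?.count ?? 0
        var t = Array(repeating: Array(repeating: 0, count: cols + 1), count: rows + 1)
        for r in 0..<rows {
            for c in 0..<cols {
                // Current pixel + sum above + sum to the left - overlap counted twice.
                t[r + 1][c + 1] = pixels[r][c] + t[r][c + 1] + t[r + 1][c] - t[r][c]
            }
        }
        table = t
    }

    // Sum of the pixels in a rectangle from four lookups at its corners,
    // independent of the rectangle's size.
    func sum(x: Int, y: Int, width: Int, height: Int) -> Int {
        let topLeft = table[y][x]
        let topRight = table[y][x + width]
        let bottomLeft = table[y + height][x]
        let bottomRight = table[y + height][x + width]
        return bottomRight - topRight - bottomLeft + topLeft
    }
}

// Hypothetical stage: a score computed from the window, compared to a threshold.
struct Stage {
    let score: (IntegralImage) -> Double
    let threshold: Double
}

// Evaluates stages in order and rejects the window at the first failure,
// so most windows without a signal are discarded after a few cheap checks.
func passesCascade(_ window: IntegralImage, stages: [Stage]) -> Bool {
    for stage in stages {
        if stage.score(window) < stage.threshold {
            return false  // rejected: later stages are never evaluated
        }
    }
    return true
}

// Example: a 2x4 window that is bright on the left and dark on the right.
let integral = IntegralImage([
    [200, 200, 50, 50],
    [200, 200, 50, 50],
])
let edgeStage = Stage(score: { window in
    Double(window.sum(x: 0, y: 0, width: 2, height: 2) - window.sum(x: 2, y: 0, width: 2, height: 2))
}, threshold: 100)
print(passesCascade(integral, stages: [edgeStage]))  // prints "true"
```

Because `sum` reads four table entries regardless of rectangle size, each Haar feature costs the same to evaluate at any scale, and placing cheap stages first lets most windows be rejected early.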

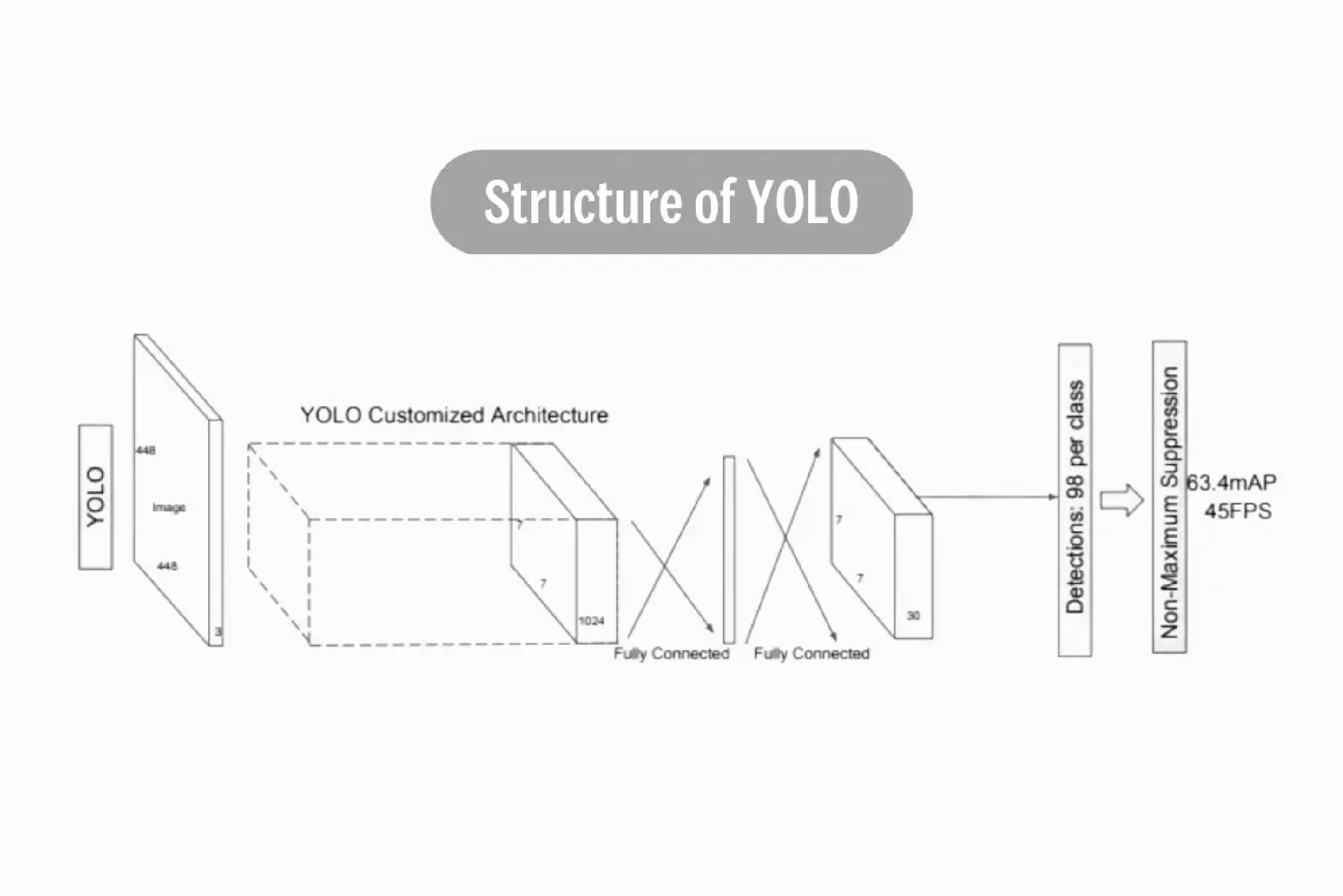

YOLO (You Only Look Once) was developed by Joseph Redmon, Ali Farhadi, and colleagues in 2015 [11]. It is a real-time object detection algorithm that uses a convolutional neural network (CNN) to predict bounding boxes and class probabilities for objects in an image. YOLO processes the entire image in a single pass. First, the image is divided into a grid of cells. Each cell predicts bounding box coordinates, a confidence score for the presence of an object, and class probabilities, all in one pass through the CNN. The network then directly outputs the bounding box coordinates, class probabilities, and confidence scores. Because YOLO is a single-stage detector, rather than a two-stage detector that first proposes candidate regions and then classifies them, it is fast and efficient, making it well suited to real-time object detection.
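The grid-based output described above can be illustrated with a simplified, YOLOv1-style decoder in Swift. It assumes one predicted box per cell, with `(x, y)` as offsets inside the cell and `(w, h)` as fractions of the image, and scores each cell as its objectness times its best class probability. The types and the 0.5 threshold in the example are hypothetical; later YOLO versions use anchor boxes and a different output layout, and a non-maximum suppression step would normally remove overlapping duplicate boxes.

```swift
// One cell's raw prediction in a simplified YOLOv1-style output.
struct CellPrediction {
    let x: Double, y: Double   // box center offset inside the cell, in [0, 1]
    let w: Double, h: Double   // box size as a fraction of the whole image
    let objectness: Double     // confidence that the box contains an object
    let classProbs: [Double]   // P(class | object), one entry per class
}

struct Detection {
    let label: String
    let score: Double
    // Box center and size in normalized [0, 1] image coordinates.
    let centerX: Double, centerY: Double, width: Double, height: Double
}

// Converts an S x S grid of cell predictions into detections whose
// class-specific score (objectness x class probability) meets a threshold.
func decodeGrid(_ grid: [[CellPrediction]], labels: [String], threshold: Double) -> [Detection] {
    let s = Double(grid.count)
    var detections: [Detection] = []
    for (row, cells) in grid.enumerated() {
        for (col, cell) in cells.enumerated() {
            // Most likely class for this cell.
            guard let best = cell.classProbs.indices.max(by: { cell.classProbs[$0] < cell.classProbs[$1] }) else { continue }
            let score = cell.objectness * cell.classProbs[best]
            guard score >= threshold else { continue }
            detections.append(Detection(
                label: labels[best],
                score: score,
                centerX: (Double(col) + cell.x) / s,   // cell offset -> image coordinate
                centerY: (Double(row) + cell.y) / s,
                width: cell.w,
                height: cell.h))
        }
    }
    return detections
}

// Example: a 2x2 grid where only the top-right cell sees a "Walk" signal.
let empty = CellPrediction(x: 0.5, y: 0.5, w: 0.1, h: 0.1, objectness: 0.01, classProbs: [0.5, 0.5])
let signal = CellPrediction(x: 0.5, y: 0.5, w: 0.2, h: 0.3, objectness: 0.9, classProbs: [0.95, 0.05])
let grid = [[empty, signal], [empty, empty]]
let found = decodeGrid(grid, labels: ["Walk", "DoNotWalk"], threshold: 0.5)
print(found.count, found.first?.label ?? "none")  // prints "1 Walk"
```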

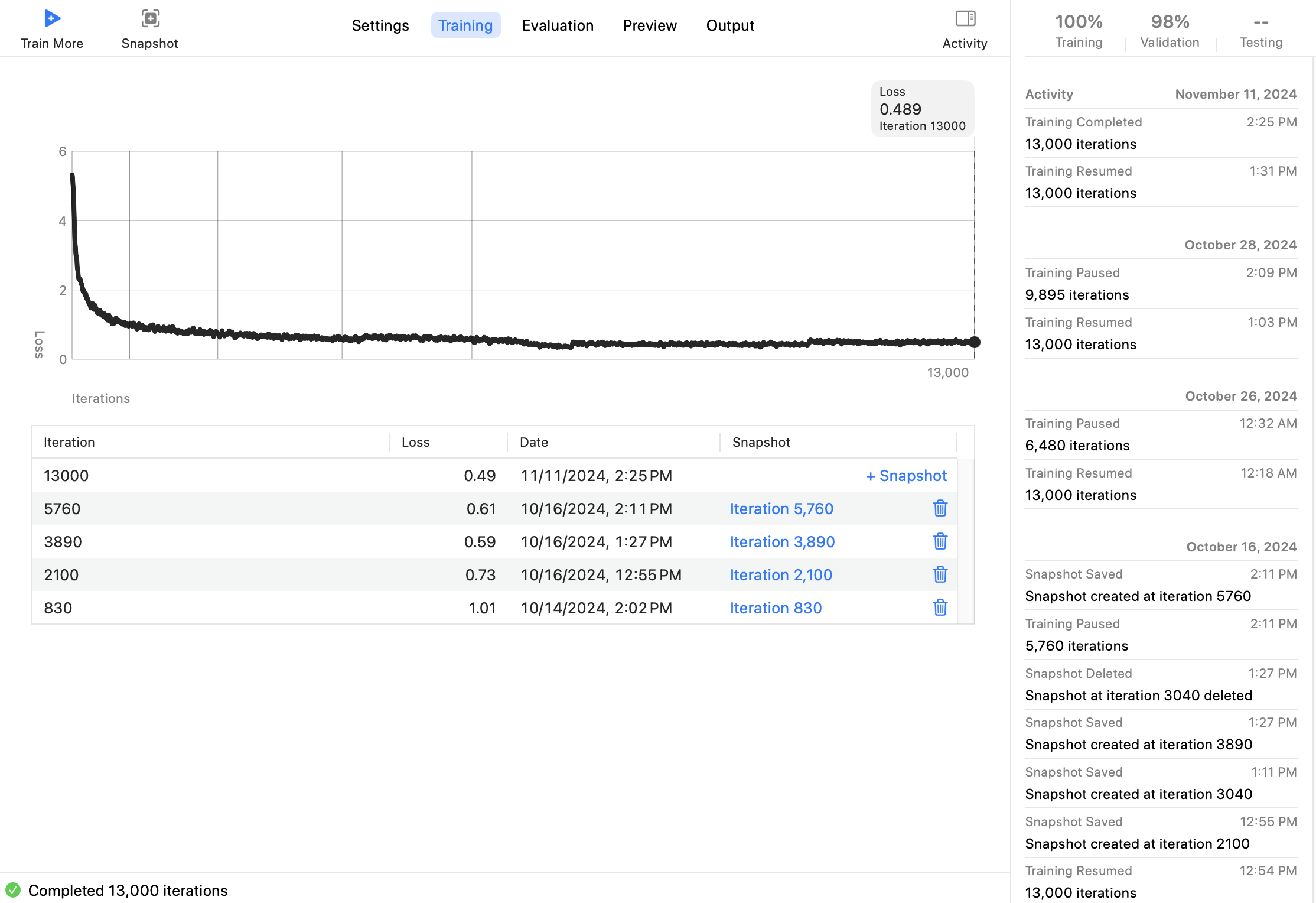

Apple Vision is a framework developed by Apple, Inc. that performs computer vision tasks on input images and video [12]. The framework was used for its ability to track the "trajectory of an object," in this case the crosswalk signal [12]. CoreML is a framework developed by Apple, Inc. for integrating machine learning models, such as the YOLO-style object detector used here, into iOS applications. It allows a single model to run across iOS devices instead of requiring a separately optimized model for each type of device. CreateML is an Apple application that can train an object detection model for use with Apple Vision and export it as a CoreML file. The images and JSON file produced with RectLabel were placed in a folder named "train" and uploaded to CreateML. The object detection template was used, and two classes were created: "Walk" and "DoNotWalk". Training was then started and ran for 13,000 iterations. CreateML's preview function allows an iOS device connected to a Mac to test the model live using the device's camera. The model was tested this way at iterations 830, 2,100, 3,890, and 5,760 to confirm that training was progressing and that the accuracy of crosswalk signal detection was increasing. Once the accuracy stabilized at 98%, the CoreML model was exported.

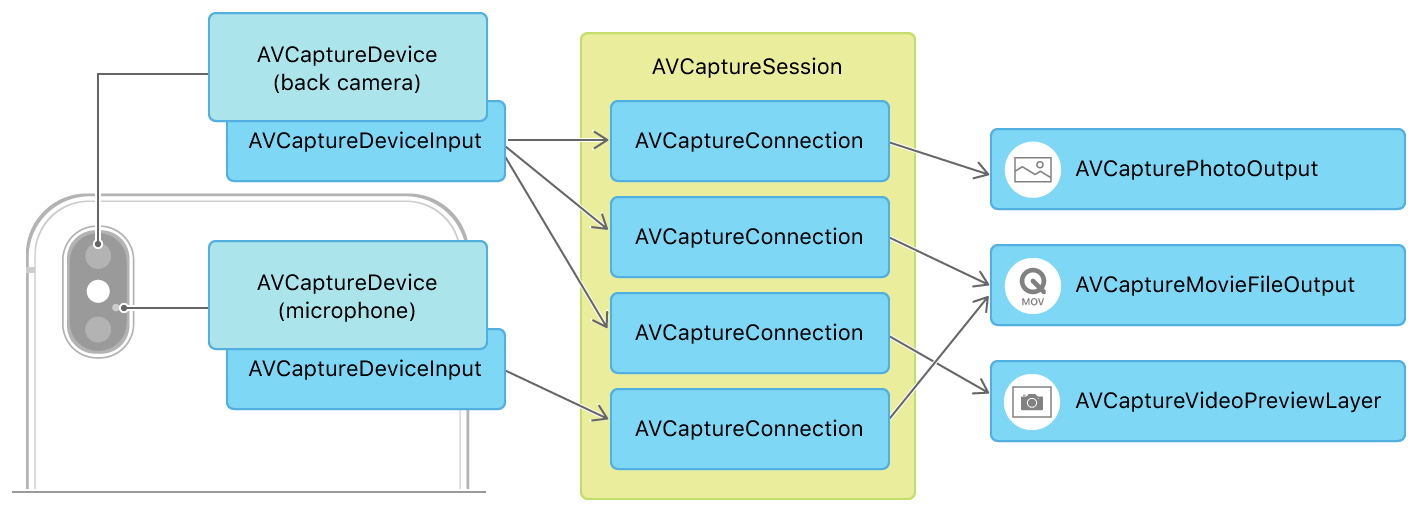

Xcode is Apple's proprietary software development environment for building Apple applications [13]. It also provides tools for testing and optimizing iOS applications. A new Xcode project targeting iOS 18.0 was created in Xcode 16.0. The project was named "ClearCross", and the developer name was left as the Mac's username. The "App" template was selected with Swift as the programming language. A folder named Models was created, and the CoreML file was imported into it as CrossWalkModel for use within the app. Because users interact with the app by pointing their device toward the crosswalk signal, the app opens directly to the camera view with brief instructions. A Swift file named CameraView was created to open a capture session (AVCaptureSession) [14].

The CrossWalkModel is loaded into the app by instantiating it as an object, and an instance property holds the detected labels and their confidence values so that they can be displayed directly [15]. When the user points the camera toward a signal, a detection is accepted only if the model's confidence meets a minimum threshold (98%, matching the accuracy the model reached in training); the app then tints the camera view so that the user knows whether it is safe to cross. The app also plays fast-paced beeps when it detects a "Walk" signal and slow-paced beeps when it detects a "DoNotWalk" signal, with haptic feedback pulsing at the same pace as the audio. The following Swift snippet from the main content view of the ClearCross app shows how the detection status is mapped to a tint color and a status message:

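```swift
import SwiftUI

// Methods of ContentView. `viewModel.detectionStatus` holds the latest
// classification of the crosswalk signal: .walk, .doNotWalk, or .none.

// Tint color overlaid on the camera view for the current detection.
func detectionTintColor() -> Color {
    switch viewModel.detectionStatus {
    case .walk:
        return .green
    case .doNotWalk:
        return .red
    case .none:
        return .clear
    }
}

// Status message shown to the user for the current detection.
func displayStatusText() -> String {
    switch viewModel.detectionStatus {
    case .walk:
        return "WALK"
    case .doNotWalk:
        return "STOP"
    case .none:
        return "No Signal Detected"
    }
}
```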
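The snippet reads `viewModel.detectionStatus` but does not show how that value is set. One possible approach, sketched below under assumptions, keeps only detections at or above a minimum confidence and uses the most confident remaining label. The `DetectionStatus` enum mirrors the cases used in the snippet, while `LabeledDetection` and `detectionStatus(from:minimumConfidence:)` are hypothetical; in the app, the labels and confidences would come from the CoreML model's results (for example, through Apple Vision).

```swift
// Mirrors the detection states used by ContentView.
enum DetectionStatus {
    case walk, doNotWalk, none
}

// Hypothetical simplified detection: a class label and a confidence in [0, 1].
struct LabeledDetection {
    let label: String
    let confidence: Float
}

// Picks the status from the most confident detection that meets the
// minimum confidence; anything below the threshold is ignored.
func detectionStatus(from detections: [LabeledDetection], minimumConfidence: Float) -> DetectionStatus {
    let confident = detections.filter { $0.confidence >= minimumConfidence }
    guard let best = confident.max(by: { $0.confidence < $1.confidence }) else {
        return .none  // nothing confident enough: no signal reported
    }
    switch best.label {
    case "Walk": return .walk
    case "DoNotWalk": return .doNotWalk
    default: return .none
    }
}

// Example frame: a weak "DoNotWalk" detection and a strong "Walk" detection.
let frameDetections = [
    LabeledDetection(label: "DoNotWalk", confidence: 0.60),
    LabeledDetection(label: "Walk", confidence: 0.99),
]
print(detectionStatus(from: frameDetections, minimumConfidence: 0.98))  // prints "walk"
```

Requiring a high threshold trades some missed detections for fewer false "Walk" reports, which is the safer failure mode for this application.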

The ContentView file defines the user interface for ClearCross. It provides users with real-time visual and textual feedback about the crosswalk signals detected in the camera view, displaying clear messages such as "WALK" or "STOP". Several settings are configurable: users can choose whether to display the confidence score of each detection, adjust the volume of the audible feedback, and turn haptic feedback off.
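The feedback behavior and settings described above could be modeled as in the following Swift sketch. The beep intervals (0.25 s for "Walk", 1.0 s for "DoNotWalk"), the default volume, and all type and function names are assumptions made for illustration; the paper does not specify them.

```swift
// Copy of the detection states so this sketch stands alone.
enum DetectionStatus {
    case walk, doNotWalk, none
}

// User-configurable settings (default values are illustrative).
struct FeedbackSettings {
    var showConfidence = false   // affects only the on-screen label
    var volume: Float = 0.8      // 0.0 (silent) to 1.0 (full)
    var hapticsEnabled = true
}

// How often to beep, how loud, and whether to vibrate with each beep.
struct FeedbackPlan: Equatable {
    let beepInterval: Double     // seconds between beeps
    let volume: Float
    let haptics: Bool
}

// Fast beeps for "Walk", slow beeps for "DoNotWalk", silence otherwise.
// The interval values are assumptions, not taken from the app.
func feedbackPlan(for status: DetectionStatus, settings: FeedbackSettings) -> FeedbackPlan? {
    let interval: Double
    switch status {
    case .walk: interval = 0.25
    case .doNotWalk: interval = 1.0
    case .none: return nil
    }
    return FeedbackPlan(beepInterval: interval,
                        volume: max(0, min(1, settings.volume)),  // clamp to [0, 1]
                        haptics: settings.hapticsEnabled)
}

if let plan = feedbackPlan(for: .walk, settings: FeedbackSettings()) {
    print(plan.beepInterval, plan.haptics)  // prints "0.25 true"
}
```

In an iOS app, a timer firing every `beepInterval` seconds could play the beep and, when `haptics` is true, trigger a UIKit `UIImpactFeedbackGenerator`, keeping the vibration in step with the audio.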

The dataset was obtained from Roboflow [8]. It contained 1,462 "DoNotWalk" images and 1,427 "Walk" images, for a total of 2,889 annotated images. The validation dataset contained 145 total images: 60 labeled "DoNotWalk" and 85 labeled "Walk". No preprocessing was applied beyond labeling the objects in RectLabel [9].

| Dataset Type | DoNotWalk Images | Walk Images | Total Images |
|---|---|---|---|
| Training | 1,462 | 1,427 | 2,889 |
| Validation | 929 | 783 | 1,712 |

The ClearCross model was trained in CreateML using the YOLOv5 architecture and exported in Apple's CoreML format, which is optimized for iOS devices. Training was conducted on the 2,889 annotated images, with 1,712 images used for validation to evaluate how well the model generalizes. Training focused on detecting crosswalk signals and classifying them as "Walk" or "DoNotWalk", and it reached an accuracy of 98% with a loss value of 0.49 after 13,000 iterations. The high training accuracy and low loss, attributed to the high-quality dataset and training parameters, suggest that the model was ready for testing in real-world scenarios.

| Sample | Signal |
|---|---|
| 1 | DoNotWalk |
| 2 | Walk |
| 3 | DoNotWalk |
| 4 | DoNotWalk |
| 5 | Walk |
| 6 | DoNotWalk |

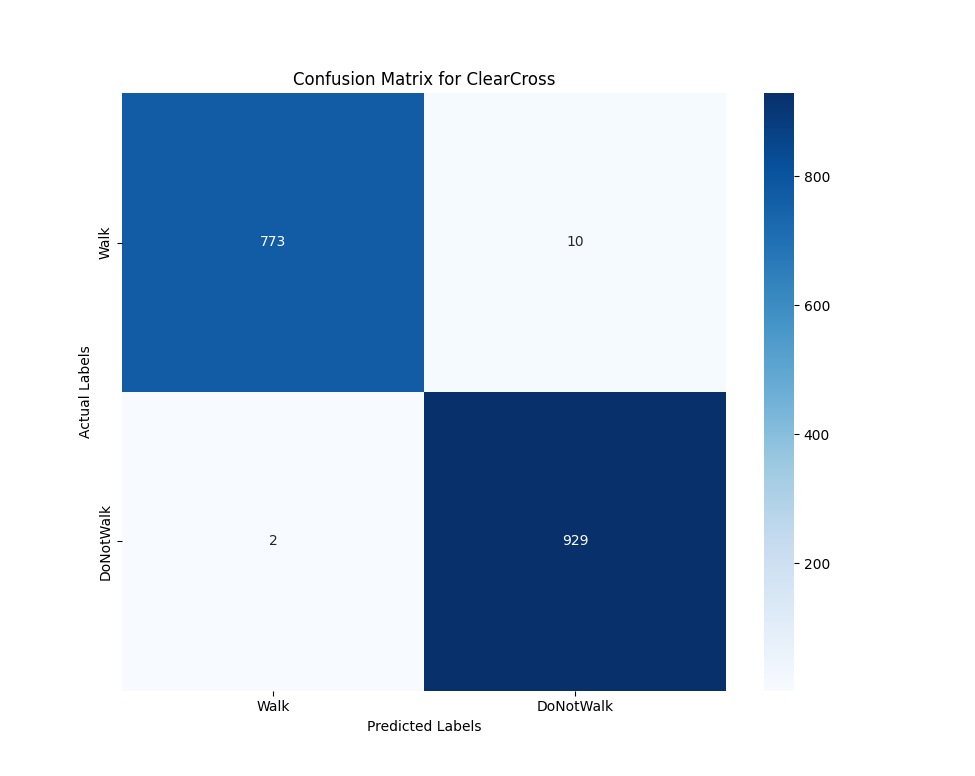

The confusion matrix summarizes the model's predictions on the test set, categorizing the outcomes into true positives, true negatives, false positives, and false negatives. This summary is important for understanding how well the ClearCross model detects and classifies crosswalk signals.

The confusion matrix results demonstrate that the ClearCross model performs well in classifying crosswalk signals. For the "Walk" class, the model correctly identified 773 signals, with only 10 false negatives and 2 false positives. The "DoNotWalk" class performed even better: all 929 signals were correctly identified, with zero false positives or false negatives. On this test data, the model is therefore nearly perfect, with a precision of 99.7% (773 of 775 "Walk" predictions correct) and a recall of 98.7% (773 of 783 "Walk" signals detected) for the "Walk" class, and 100% precision and recall for the "DoNotWalk" class.
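The precision and recall values follow directly from the confusion-matrix counts: precision is TP / (TP + FP), and recall is TP / (TP + FN). The short Swift sketch below reproduces the arithmetic for both classes using the counts reported above; the `ClassCounts` type is hypothetical.

```swift
import Foundation

// Confusion-matrix counts for one class.
struct ClassCounts {
    let truePositives: Int
    let falsePositives: Int
    let falseNegatives: Int

    // Fraction of this class's predictions that were correct.
    var precision: Double {
        Double(truePositives) / Double(truePositives + falsePositives)
    }

    // Fraction of actual instances of this class that were detected.
    var recall: Double {
        Double(truePositives) / Double(truePositives + falseNegatives)
    }
}

let walk = ClassCounts(truePositives: 773, falsePositives: 2, falseNegatives: 10)
let doNotWalk = ClassCounts(truePositives: 929, falsePositives: 0, falseNegatives: 0)

print(String(format: "Walk: precision %.1f%%, recall %.1f%%", walk.precision * 100, walk.recall * 100))
// prints "Walk: precision 99.7%, recall 98.7%"
```

Rounded to whole percentages, these give the roughly 100% precision and 99% recall for "Walk" that the matrix suggests.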

To evaluate the performance of ClearCross in real-world scenarios, the app was tested at an active crosswalk at the intersection of Elm Street and College Street on Yale University's campus in New Haven, Connecticut. The location was ideal because it has high pedestrian activity and well-marked streets and crosswalk signals. The test was intended to validate the app's accuracy in detecting and classifying "Walk" and "DoNotWalk" signals under real daytime conditions.

The test was performed on an iPhone 14 Pro running iOS 18.1, with the ClearCross app using the device's camera to identify the crosswalk signal. Conditions during the test included bright daylight, moderate pedestrian traffic, and no hazardous or obstructive weather. The app identified the crosswalk signals with high accuracy and maintained its performance across multiple attempts at the crosswalk.

ClearCross represents a significant step toward addressing the safety challenges faced by visually impaired pedestrians. Using machine learning and real-time object detection, the app provides accessible, portable support for crosswalk navigation. ClearCross demonstrates the potential of AI-powered mobile applications to address challenges faced by underserved populations and suggests broader applications of the project in accessibility technology [16].

The project has several limitations. The AuralVision dataset from Roboflow primarily contains images of crosswalk signals from the United States [8]. As a result, the app may not accurately detect signals in other countries, which often have different designs and shapes. The model performs well in standard conditions, but it has not been tested in adverse weather or with obstructions, either of which may affect its ability to detect the crosswalk signal accurately. CoreML models are designed to run efficiently on iOS devices; however, performance still depends on the hardware capabilities of the device, so older devices may experience slower processing times or reduced accuracy. When a crosswalk signal is broken or malfunctioning, the app may not detect it accurately because its appearance differs from the signals in the training dataset. Finally, the app is currently available only for iOS devices, because the CoreML framework does not support Android.

Future work on ClearCross will include expanding the dataset the model is trained on. The expanded dataset could include signals from other geographic regions, to support global use, as well as images captured in poor or obstructive weather. A key additional feature would be a visual augmented reality (AR) overlay, which could provide directional guidance on the camera feed, such as the distance to the end of the crosswalk. Extending ClearCross to Android is also essential to increase accessibility.

ClearCross addresses a specific safety need by supporting safe navigation for visually impaired individuals in increasingly complex urban environments. By focusing on the specific challenge of crosswalk navigation, the ClearCross application represents a small step toward assistive technologies that address the accessibility gaps caused by visual impairment.